Sep 22, 2025

Introduction

The artificial intelligence landscape continues to evolve, and OpenAI’s latest release, GPT-5, represents a significant milestone in conversational AI capabilities. More than two years after we first explored the fundamentals of calling ChatGPT models via the API, GPT-5 is the perfect opportunity to revisit the topic and master the features that have emerged since. This comprehensive guide walks you through GPT-5’s API, from basic setup to advanced implementation techniques.

As we’ll discover throughout this guide, GPT-5 introduces sophisticated features like enhanced reasoning capabilities, dynamic verbosity controls, and powerful custom tool integrations, but it also brings increased complexity that demands a deeper understanding of the underlying systems. As usual, you can follow along with the video here or the written guide below.

What’s New in GPT-5

Highlights

Enhanced Reasoning & Coding Skills

Dynamic Router Approach with intelligent model distribution per task

Larger context windows

Efficiency and Infrastructure Advancements

Swift Responsive Fixes from OpenAI

Adjustments to Tone & Personality

Lowlights

Emotional Disconnection & Cold Tone

Poor Rollout Experience & Feature Removal

Underwhelming Improvement Perceived

Trust & Reliability Gaps

Increased Complexity for API Calls

The Law of Increasing Complexity

Throughout the technology industry, platforms inevitably grow more complex as they mature and add capabilities. GPT-5 follows the same trend as mobile development’s evolution from Java to Kotlin or from Objective-C to Swift: it offers unprecedented AI capabilities while introducing layers of complexity that can overwhelm newcomers. Because OpenAI keeps adding features at an accelerating pace, what once took a weekend to master now demands continuous learning, which makes it all the more important to get on the learning curve early.

Key Changes

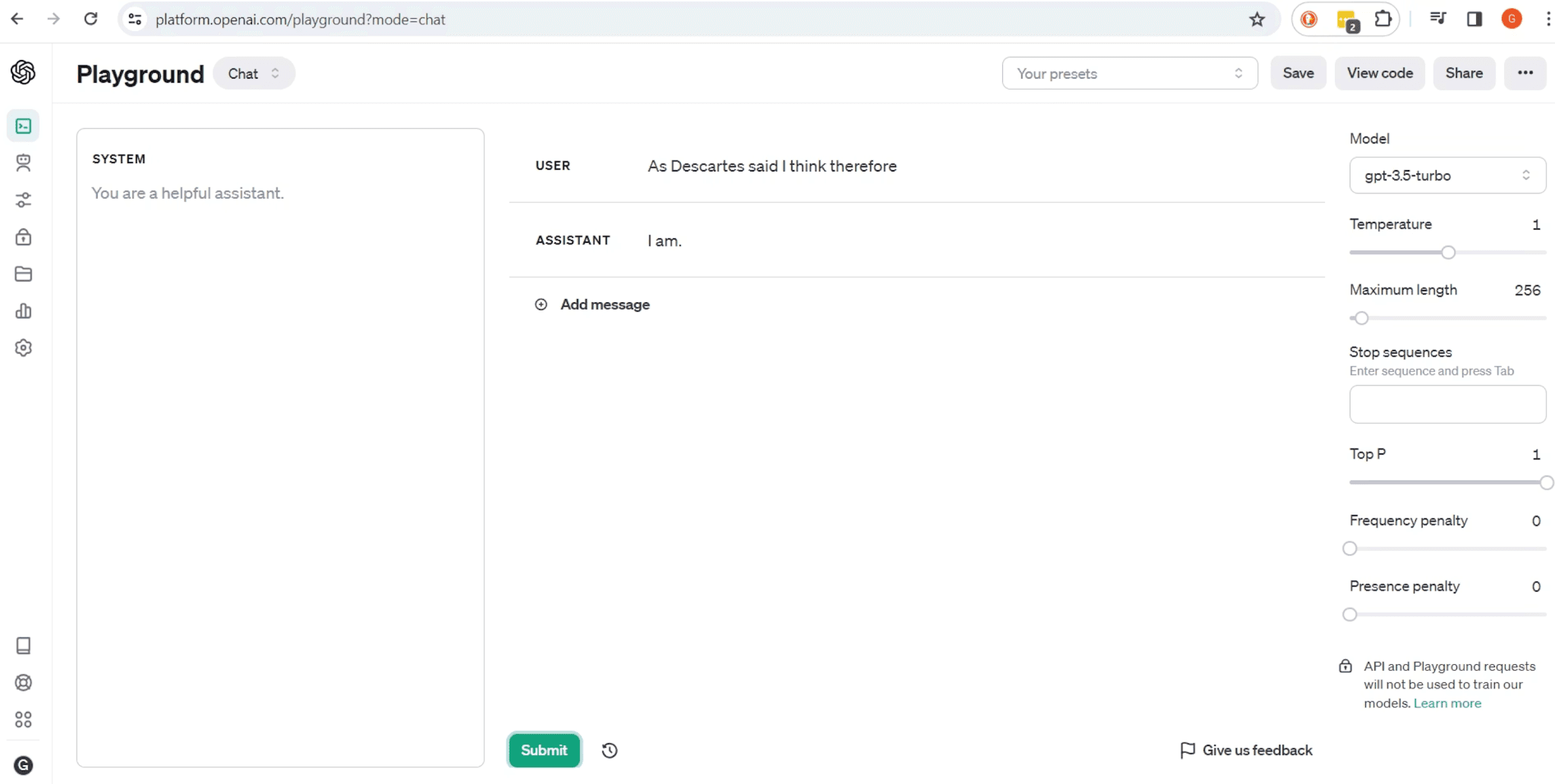

Here is what the Playground used to look like for GPT-3.5:

Let’s start with a fundamental example that demonstrates the core differences between GPT-3.5 and GPT-5 API calls. The evolution in API design reflects both increased sophistication and improved developer experience.

GPT-3.5 Code Example

To help illustrate the differences between 3.5 and 5, take a look at this example from a traditional GPT 3.5 call:

The parameters at the bottom of the example represent ways to refine the possible output response.

• temperature controls the level of variation in responses. At 0, the output is close to deterministic, so you will get essentially the same response every time.

• top_p controls nucleus sampling: the model only considers the most likely tokens that together make up the top_p share of the probability mass (for example, 0.1 means only the top 10%).

• frequency_penalty penalizes a token in proportion to how many times it has already appeared; positive values discourage repetition and negative values encourage it.

• presence_penalty applies a one-time penalty to any token that has appeared at all; positive values push the model toward new topics and negative values keep it on familiar ones.
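A minimal sketch of such a GPT-3.5 request, assuming the openai-python v1 client. The system message and the parameter values are illustrative, not taken from the original example. Building the keyword arguments in a separate function keeps them easy to inspect before anything is sent.

```python
def build_gpt35_request(prompt: str) -> dict:
    """Keyword arguments for client.chat.completions.create() (values are illustrative)."""
    return {
        "model": "gpt-3.5-turbo",
        "messages": [
            # GPT-3.5 used the "system" role for instructions
            {"role": "system", "content": "Complete the user's sentence."},
            {"role": "user", "content": prompt},
        ],
        "temperature": 0,        # minimal variation between runs
        "top_p": 1,              # consider the full probability mass
        "frequency_penalty": 0,  # no penalty for repeated tokens
        "presence_penalty": 0,   # no penalty for tokens already seen
        "max_tokens": 16,        # keep the completion short
    }


def complete(client, prompt: str = "I think, therefore") -> str:
    """Send the request with an existing openai.OpenAI() client and return the text."""
    response = client.chat.completions.create(**build_gpt35_request(prompt))
    return response.choices[0].message.content
```

With `from openai import OpenAI` and an `OPENAI_API_KEY` in your environment, `complete(OpenAI())` sends the request.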

Despite what you may have heard in the news, if you run the code today, it will still ping 3.5 and as expected it should return the phrase “I am,” completing Descartes’ famous statement.

GPT-5 Enhanced Implementation

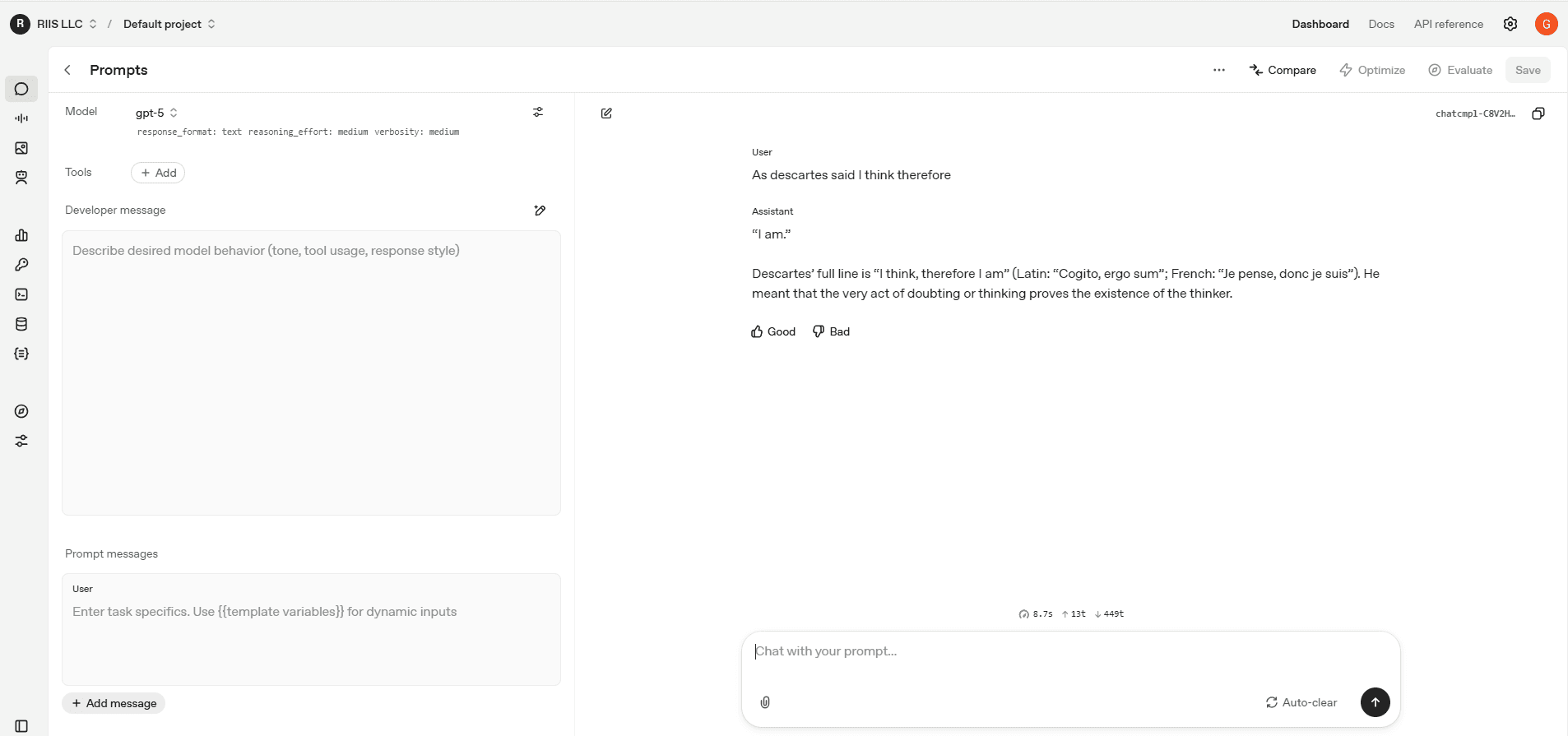

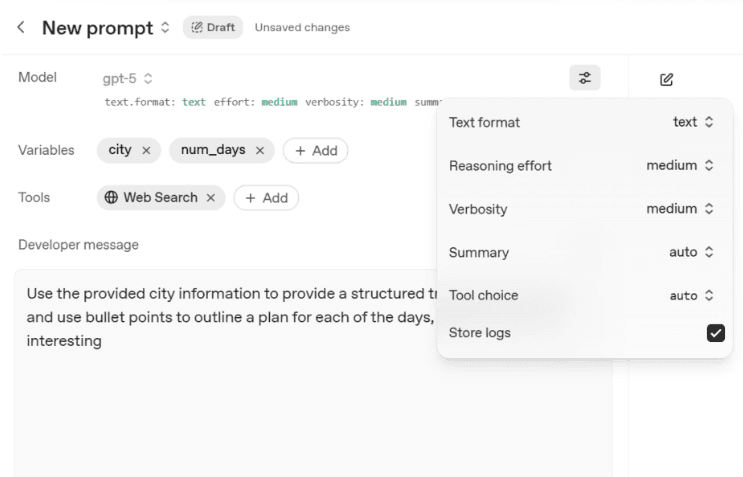

In GPT-5, the layout has shifted significantly. Model parameters now sit on the left side, the System message has been replaced with a Developer message (the two are mostly the same, so don’t worry), and additional Tools can be added with a button click or two. These include web search, image generation, and connecting to an MCP server.

You’ll notice that the temperature, top_p, and penalty options have been removed. They’ve been replaced with options that have a clearer impact on the output, which you will see in an example below.

If you click on the three dots in the top right corner, you will get the code for your call, which should look something like the following depending on your configuration:

The key differences are immediately apparent: the role designation has changed from “system” to “developer,” and two new parameters, verbosity and reasoning_effort, provide fine-grained control over response characteristics.
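A sketch of the GPT-5 equivalent, again via chat completions. It assumes a recent openai-python release that accepts `verbosity` and `reasoning_effort` as top-level arguments; the developer message text is a placeholder.

```python
VERBOSITY_LEVELS = {"low", "medium", "high"}
REASONING_EFFORTS = {"minimal", "low", "medium", "high"}


def build_gpt5_chat_request(prompt: str, verbosity: str = "medium",
                            reasoning_effort: str = "medium") -> dict:
    """Keyword arguments for client.chat.completions.create() with GPT-5."""
    if verbosity not in VERBOSITY_LEVELS:
        raise ValueError(f"unsupported verbosity: {verbosity!r}")
    if reasoning_effort not in REASONING_EFFORTS:
        raise ValueError(f"unsupported reasoning_effort: {reasoning_effort!r}")
    return {
        "model": "gpt-5",
        "messages": [
            # "developer" takes over the role of the old "system" message
            {"role": "developer", "content": "Complete the user's sentence."},
            {"role": "user", "content": prompt},
        ],
        # No temperature, top_p, or penalties: GPT-5 is steered with these two instead
        "verbosity": verbosity,
        "reasoning_effort": reasoning_effort,
    }
```

Usage: `client.chat.completions.create(**build_gpt5_chat_request("I think, therefore", verbosity="low"))`.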

If you run the code, you should get this response:

Perhaps we will have to turn down the verbosity a little bit ;).

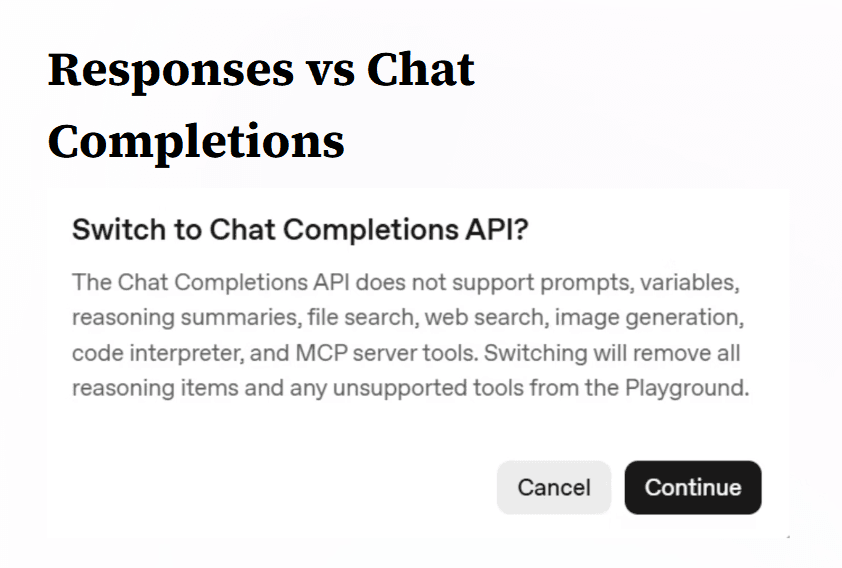

Responses vs Chat Completions: A Critical Architectural Decision

GPT-5 introduces a significant architectural choice between two communication patterns: chat completions and responses. This decision has far-reaching implications for application design and functionality.

Chat Completions: The Traditional Approach

The chat completions API maintains compatibility with existing applications but lacks access to many of GPT-5’s advanced features. Although it accepts the reasoning_effort parameter, it cannot carry the model’s reasoning from one turn to the next, and it does not provide access to the hosted tool ecosystem (web search, image generation, MCP servers) covered later in this guide.

Responses API: The Future-Forward Choice

The responses API offers several crucial advantages. First, it provides access to GPT-5’s complete feature set, including advanced reasoning capabilities and the full tool ecosystem. Second, and perhaps more importantly, it offers stateful conversation management: responses saved with store=True can be chained by passing the previous response’s ID as previous_response_id. This eliminates the conversation history management that developers had to implement manually with chat completions.
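A minimal sketch of that stateful pattern, assuming the openai-python client’s `responses.create` method and its `output_text` convenience property; the instructions string is a placeholder. Each turn sends only the new user input plus the ID of the previous response, and the server supplies the earlier context.

```python
from typing import Optional


def build_turn(user_input: str, previous_response_id: Optional[str] = None) -> dict:
    """Keyword arguments for client.responses.create() for one conversation turn."""
    request = {
        "model": "gpt-5",
        "instructions": "You are a concise assistant.",  # developer-level instructions
        "input": user_input,
        "reasoning": {"effort": "low"},
        "text": {"verbosity": "low"},
        "store": True,  # keep the response server-side so later turns can reference it
    }
    if previous_response_id is not None:
        # Chain onto the stored conversation instead of resending the history
        request["previous_response_id"] = previous_response_id
    return request


def run_conversation(client, turns: list[str]) -> list[str]:
    """Send each user turn in order, linking every response to the one before it."""
    previous_id = None
    replies = []
    for turn in turns:
        response = client.responses.create(**build_turn(turn, previous_id))
        replies.append(response.output_text)
        previous_id = response.id
    return replies
```

The instructions are resent on every turn deliberately, since they are not inherited through previous_response_id.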

Here’s what we get when we run the responses API for the same input as before:

That may be the clearest answer yet, but don’t let that deceive you. Tweaking the parameters for either API can get you similar answers for information as widely available as this, but the Responses API will open up many more tools to you and is the forward-looking option.

Taking 5 for a Test Ride

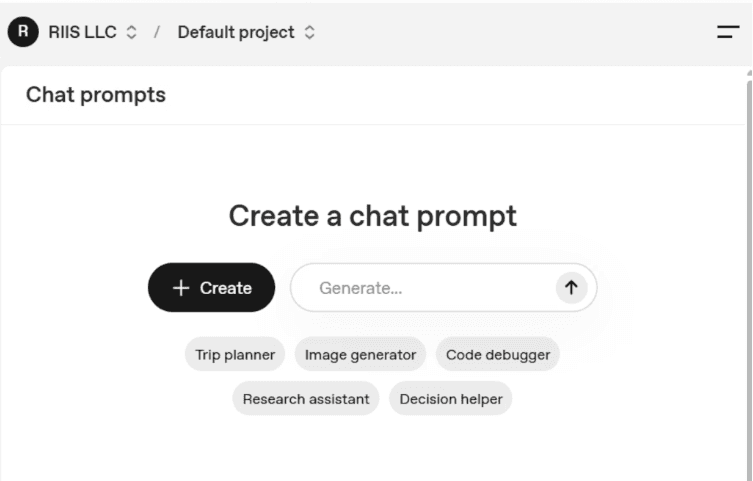

OpenAI API Integration for Dublin Trip Planning with GPT-5

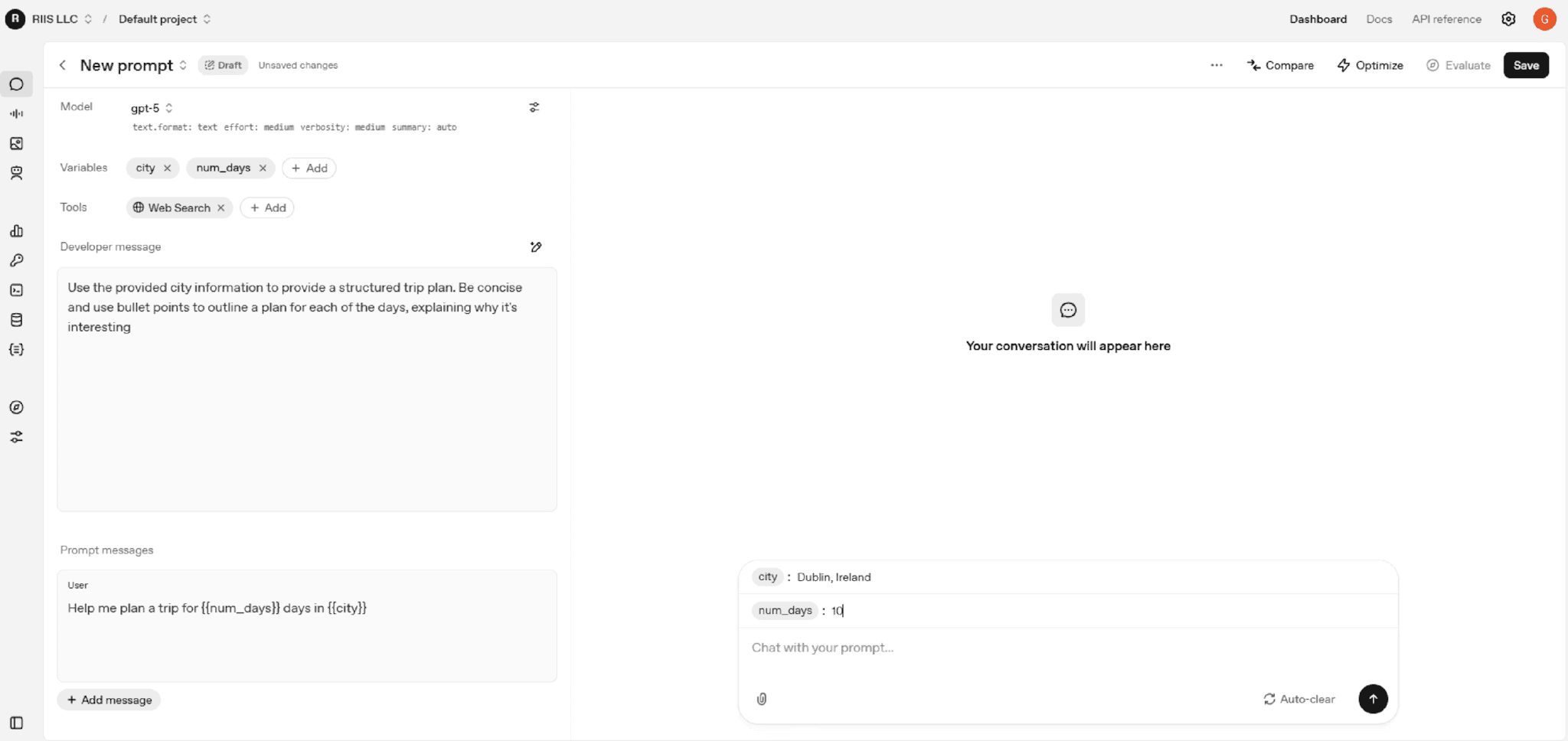

The Playground offers some great example prompts for learning GPT-5’s nuances. Let’s click on the (Trip Planner) option:

Pressing that will automatically add the variables city and num_days to our Variables and Web Search to our tools.

If you click on the filter icon to the right of the model, you can see our options for all of the parameters in our example call, like Reasoning Effort, Verbosity, and so on.

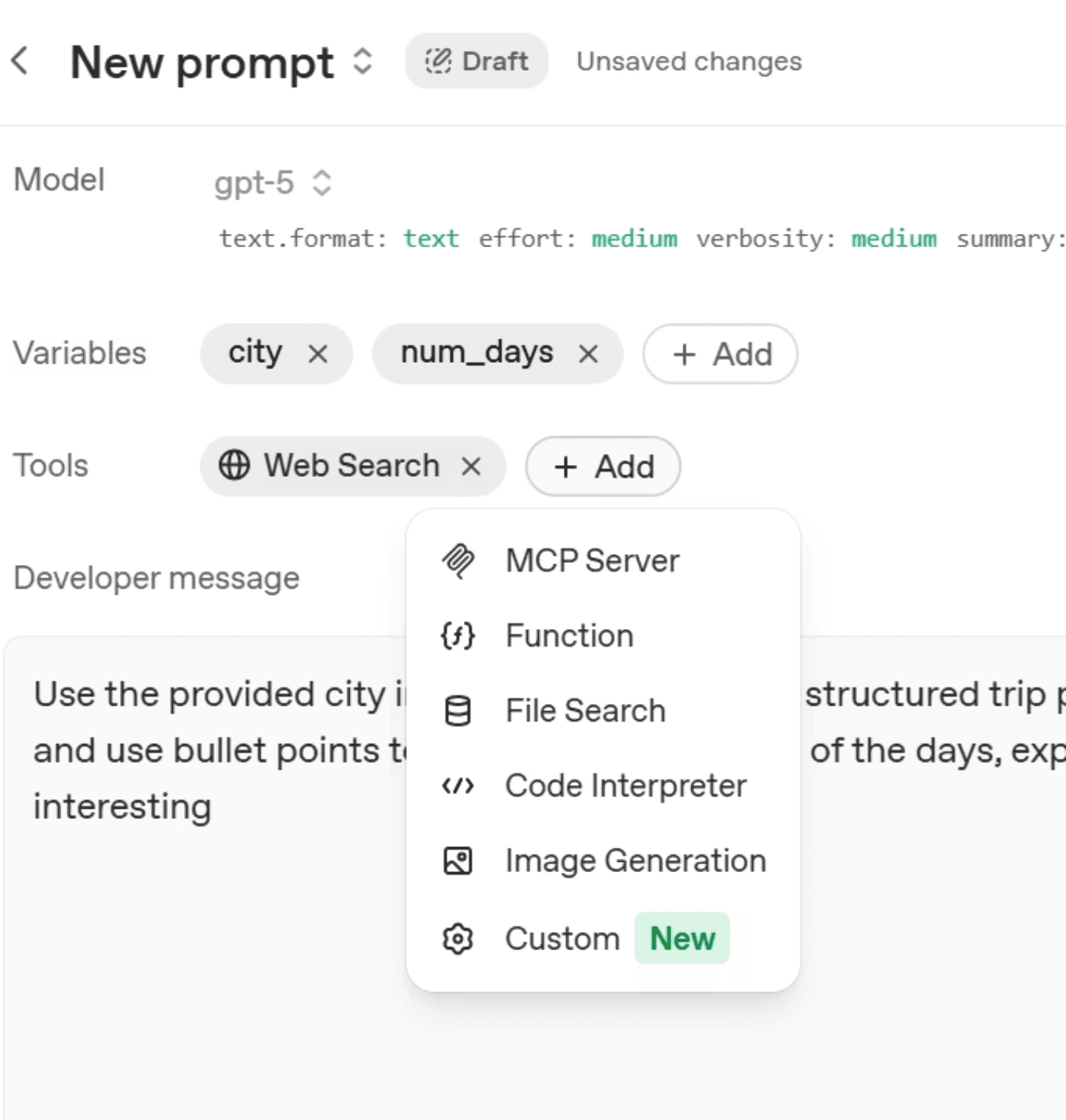

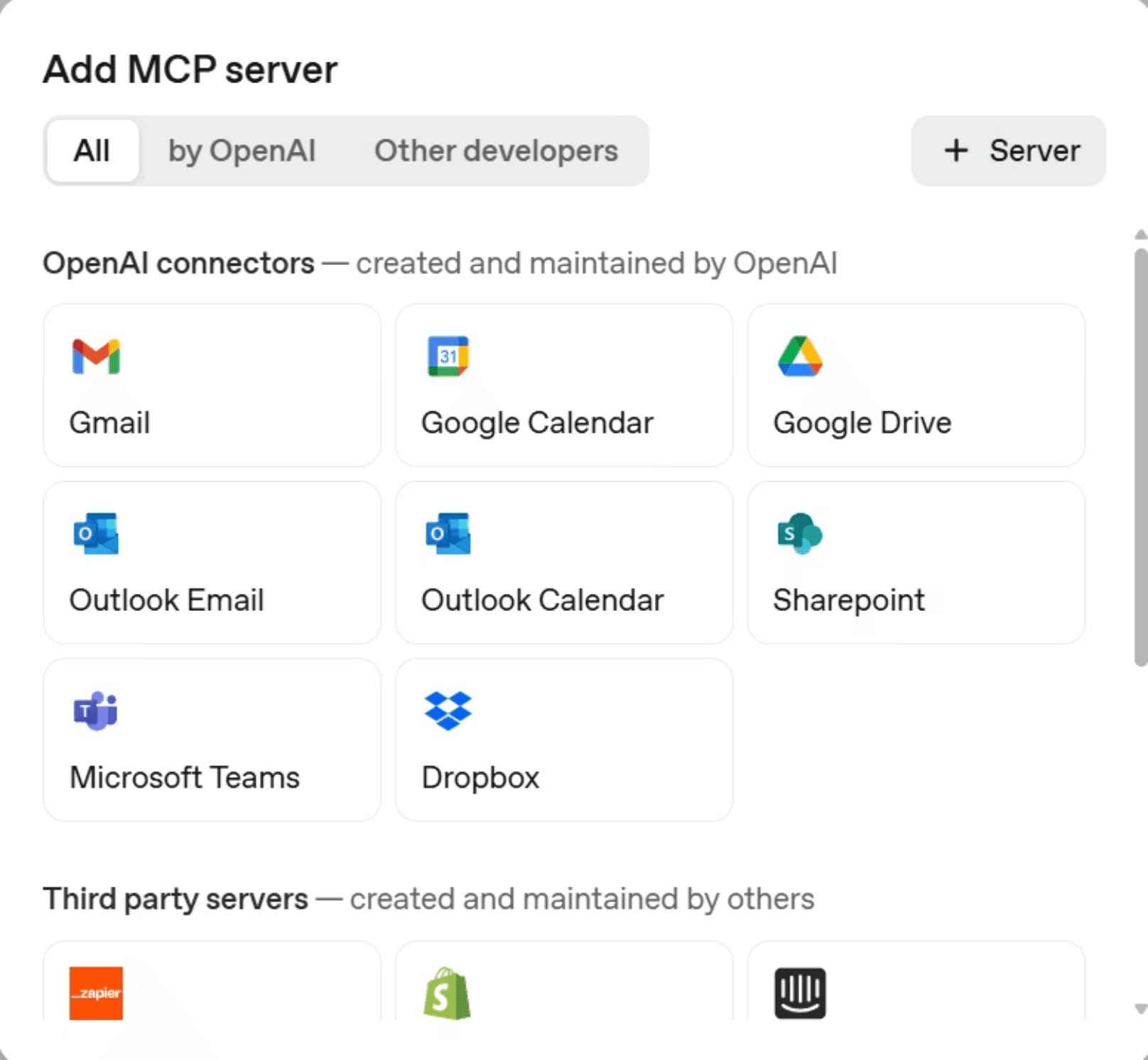

Say you wanted to add another tool to this call. Click on that (+Add) button in the tool row and you will get a list of options.

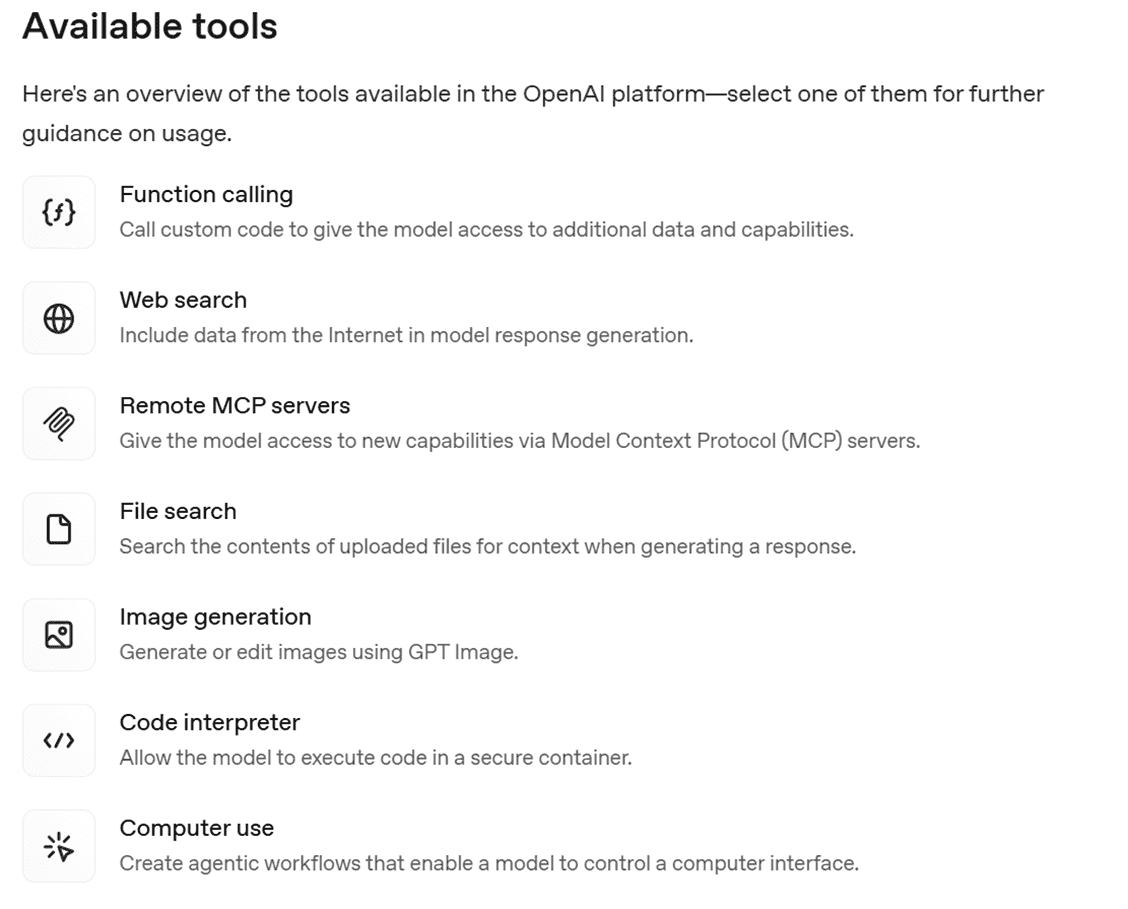

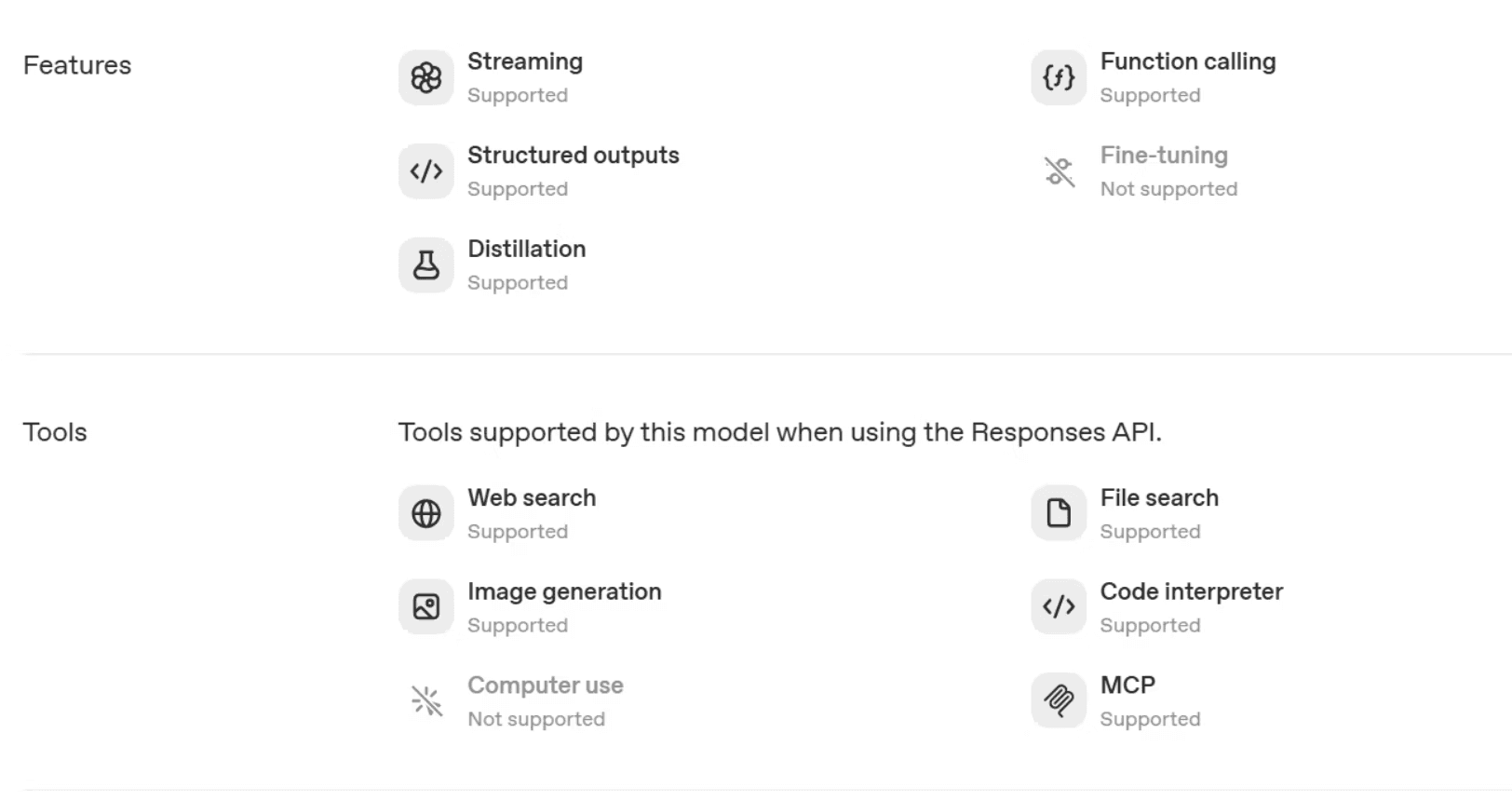

Take note of that Custom option at the bottom. We will get back to that later. For a full look at how you can utilize all the tools available we suggest looking at the documentation, but this image gives you a brief description of each for now:

Let’s give our Trip Planner a spin. We are going to set our city to Dublin and the number of days to 5 in the Variables section above the chat interface on the bottom right.

Our test should yield something like the following:

Great, now click on the three dots again to grab our code, which should look like this block below:

This example demonstrates several key GPT-5 capabilities working in concert. The web search tool automatically activates when the model determines it needs current information about Dublin attractions, accommodations, and events. The reasoning effort is set to “low” for faster response times, while the verbosity remains at “medium” to provide detailed daily itineraries with explanations.
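A self-contained sketch of the same request. The Playground export references the saved Trip Planner prompt by its ID and passes city and num_days as prompt variables; to avoid depending on a prompt ID, this version inlines a hypothetical template instead. The `web_search_preview` tool type is the name from the 2025 Responses API docs (newer versions also list `web_search`), so check the current reference before relying on it.

```python
# Hypothetical template text; the Playground's saved Trip Planner prompt may differ.
TRIP_TEMPLATE = (
    "Plan a {num_days}-day trip to {city}. For each day, list the main attractions, "
    "explain why each one is worth visiting, and add practical tips for travelers."
)


def build_trip_request(city: str, num_days: int) -> dict:
    """Keyword arguments for client.responses.create() for the trip planner."""
    return {
        "model": "gpt-5",
        "input": TRIP_TEMPLATE.format(city=city, num_days=num_days),
        # Hosted web search; the model decides when it needs fresh information
        "tools": [{"type": "web_search_preview"}],
        "reasoning": {"effort": "low"},   # faster responses
        "text": {"verbosity": "medium"},  # detailed daily itineraries
    }
```

Usage: `print(client.responses.create(**build_trip_request("Dublin", 5)).output_text)`.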

The resulting output provides a structured 5-day Dublin itinerary with daily recommendations, explanations of why each attraction is noteworthy, and practical considerations for travelers. This level of comprehensive, real-time planning would have required multiple API calls and manual coordination with previous model versions.

A More Complete Overview of the New Features

GPT-5 introduces several groundbreaking capabilities that significantly expand what’s possible with AI-powered applications. These features represent not just incremental improvements but fundamental advances in how we can interact with and leverage large language models.

Verbosity Parameter: Precision Control Over Output Length

The verbosity parameter provides three distinct levels of output control, each tailored to specific use cases. In one comparison, low verbosity generated approximately 560 tokens, ideal for concise responses, quick answers, or applications where brevity is essential; medium verbosity produced around 850 tokens, offering balanced detail suitable for most interactive applications; and high verbosity generated 1,300 tokens or more, suited to comprehensive documentation, detailed explanations, or educational content. The exact counts depend on the prompt, because verbosity steers length rather than enforcing a hard token limit.

This parameter proves particularly valuable in code generation scenarios. Low verbosity produces minimal, functional code with no extraneous comments—perfect for experienced developers who understand the context. Medium verbosity adds helpful comments and basic documentation, while high verbosity generates production-ready code with comprehensive comments, error handling, and best practice recommendations.
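To see the effect on your own prompts, a sketch like the following sends the same input at each verbosity level and records the visible output length. It assumes the Responses API usage fields `output_tokens` and `output_tokens_details.reasoning_tokens`; reasoning tokens are subtracted because they count as output but are never shown. Reasoning effort is pinned to "minimal" so that only verbosity varies.

```python
def visible_tokens(usage) -> int:
    """Output tokens the user actually sees (total output minus hidden reasoning)."""
    details = getattr(usage, "output_tokens_details", None)
    reasoning = getattr(details, "reasoning_tokens", 0) or 0
    return usage.output_tokens - reasoning


def compare_verbosity(client, prompt: str, levels=("low", "medium", "high")) -> dict:
    """Return {verbosity level: visible output tokens} for one prompt."""
    counts = {}
    for level in levels:
        response = client.responses.create(
            model="gpt-5",
            input=prompt,
            reasoning={"effort": "minimal"},  # hold reasoning constant
            text={"verbosity": level},
        )
        counts[level] = visible_tokens(response.usage)
    return counts
```

For a code-generation prompt, reading each response’s `output_text` side by side also shows the shift from bare code to commented, documented code.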

Freeform Function Calling: Breaking Traditional API Boundaries

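One of GPT-5’s most innovative features is freeform function calling, which lets the model send raw text, such as code, queries, or configuration files, directly to a custom tool instead of wrapping its arguments in JSON. The model generates the payload; your application decides whether and how to execute it. This transcends traditional function-calling limitations by enabling direct text payload generation to custom tools.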

The implementation revolves around defining custom tools with specific purposes, then allowing GPT-5 to determine how to interact with them. For example, a simple code execution tool can be defined and used as follows:
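A minimal sketch of such a tool definition, assuming the Responses API’s `custom` tool type. The tool name `code_exec` and its description are illustrative. Note that the tool has no JSON parameter schema: the model sends its code as raw text.

```python
CODE_EXEC_TOOL = {
    "type": "custom",  # freeform text input instead of JSON arguments
    "name": "code_exec",
    "description": "Executes arbitrary Python code and returns its output.",
}


def build_code_exec_request(task: str) -> dict:
    """Keyword arguments for client.responses.create() that invite a code_exec call."""
    return {
        "model": "gpt-5",
        "input": f"Use the code_exec tool to {task}",
        "tools": [CODE_EXEC_TOOL],
    }
```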

The remarkable aspect of this implementation is its simplicity: we describe what a tool should do rather than implementing complex function signatures or API endpoints. GPT-5 understands the intent and generates appropriate code to fulfill the request.

Multi-Language Code Generation: Cross-Platform Development Simplified

GPT-5’s multi-language code generation capabilities address one of software development’s most persistent challenges: maintaining consistency across different programming languages and platforms. This feature enables developers to specify requirements once and receive implementations in Python, C++, Java, or other supported languages.

This capability proves invaluable for teams working with heterogeneous technology stacks or developers learning new languages. By understanding the logic and structure in their familiar language, developers can more easily transition to unfamiliar syntaxes and paradigms.
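One way to set this up, sketched under the assumption that you register one freeform custom tool per language and describe the task once. The tool names `code_exec_python`, `code_exec_cpp`, and `code_exec_java` are hypothetical.

```python
LANGUAGES = {"python": "Python", "cpp": "C++", "java": "Java"}


def language_tool(key: str) -> dict:
    """A freeform custom tool that receives source code in one language."""
    return {
        "type": "custom",
        "name": f"code_exec_{key}",
        "description": f"Executes {LANGUAGES[key]} code and returns its output.",
    }


def build_multilang_request(task: str) -> dict:
    """Ask for the same task in every language, one tool call per language."""
    names = ", ".join(LANGUAGES.values())
    return {
        "model": "gpt-5",
        "input": (
            f"{task} Implement it once in each of {names}, "
            "sending each version to the matching code_exec tool."
        ),
        "tools": [language_tool(key) for key in LANGUAGES],
    }
```

Keeping one tool per language lets your application route each payload to the right compiler or interpreter.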

Context-Free Grammar Integration: Structured Output Control

The integration of Context-Free Grammar (CFG) support represents a sophisticated approach to ensuring output format compliance. CFG has deep roots in compiler design and formal language theory, making it an ideal tool for constraining AI output to specific structural requirements.

Advanced Tool Use Examples

MCP Integration and External Tool Connectivity

Model Context Protocol (MCP) integration represents one of GPT-5’s most powerful features for extending AI capabilities beyond the model itself. MCP provides standardized access to external systems, databases, and services, enabling natural language interactions with complex backend systems.

We completed a two-part series on MCP for a more in-depth look at the technology. You can read part 1 and part 2 if you’d like to know more; otherwise, the example we’ve cooked up for this tutorial will teach you some basic principles as well.

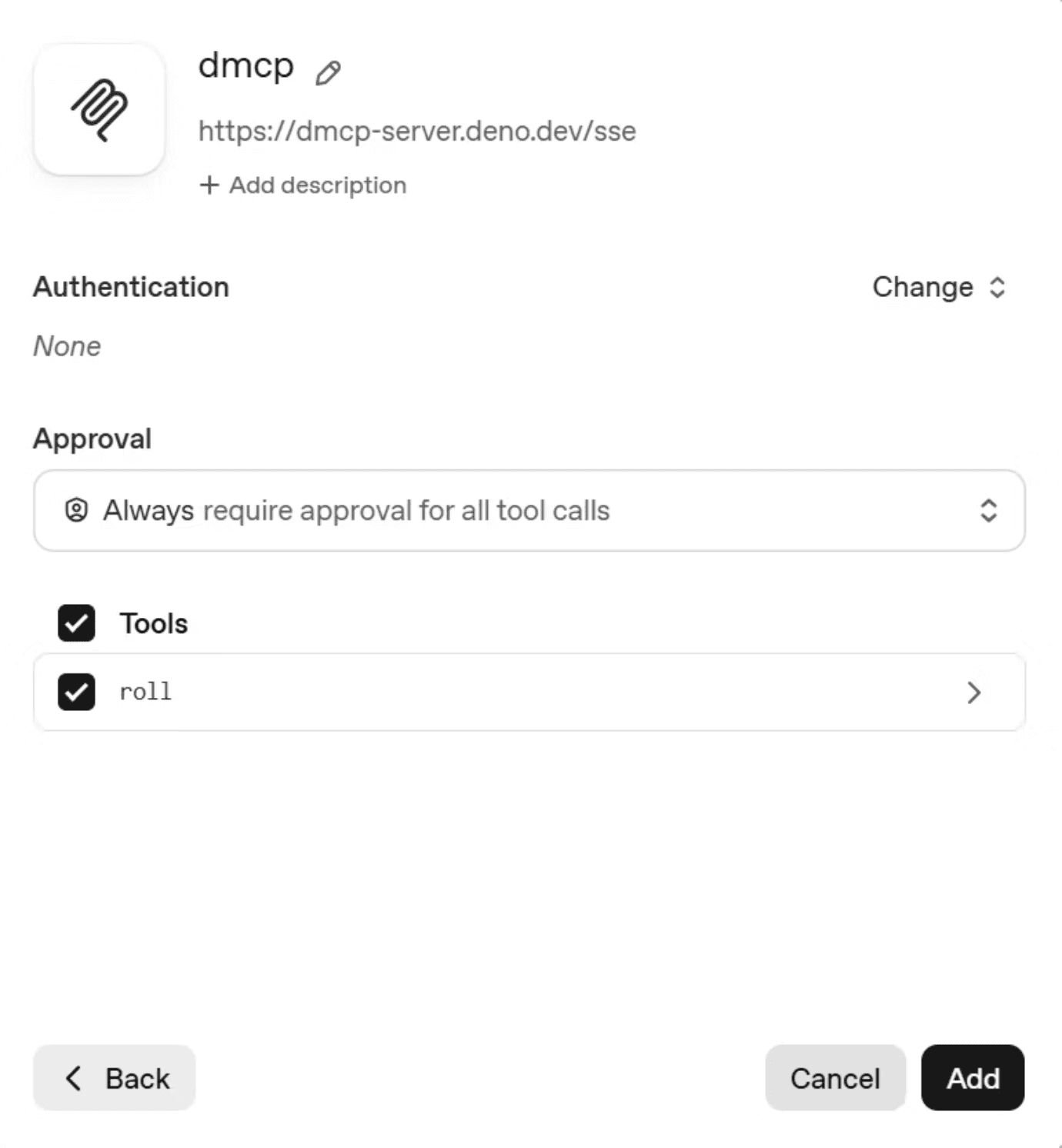

We’re going to connect to a Dungeons & Dragons server for some fun. Add an MCP Server with the (+ Add) tool button then click on the [+ Server] button in the top right. Enter ‘https://dmcp-server.deno.dev/sse’ as the URL, ‘dmcp’ as your Label, and ‘A Dungeons and Dragons MCP server to assist with dice rolling’ as your Description.

Hit [Connect], and the next screen will show a list of actions you can add. Make sure to check the dice roll action and click [Add].

Now, in the prompt, we will ask it to ‘Roll 2d4+1’. In English, that means roll two 4-sided dice, add the results together, and add 1 to the total. We got 5 as our answer, but yours will vary depending on what the dice rolled. Click on those three dots one more time and let’s take a look at the code:
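A sketch of the equivalent request, assuming the Responses API’s `mcp` tool type with the server settings entered above. Setting `require_approval` to "never" so the call completes in one round trip is our own choice, as is the `dice_range` helper for sanity-checking the result.

```python
import re

DMCP_TOOL = {
    "type": "mcp",
    "server_label": "dmcp",
    "server_description": "A Dungeons and Dragons MCP server to assist with dice rolling",
    "server_url": "https://dmcp-server.deno.dev/sse",
    "require_approval": "never",  # skip per-call approval prompts (our choice)
}


def build_dice_request(notation: str = "2d4+1") -> dict:
    """Keyword arguments for client.responses.create() that route to the MCP server."""
    return {"model": "gpt-5", "input": f"Roll {notation}", "tools": [DMCP_TOOL]}


def dice_range(notation: str) -> tuple[int, int]:
    """Smallest and largest totals for NdS+M notation, e.g. '2d4+1'."""
    match = re.fullmatch(r"(\d+)d(\d+)([+-]\d+)?", notation.replace(" ", ""))
    if match is None:
        raise ValueError(f"unrecognized dice notation: {notation!r}")
    count, sides = int(match.group(1)), int(match.group(2))
    modifier = int(match.group(3) or 0)
    # Every die shows at least 1 and at most `sides`
    return count + modifier, count * sides + modifier
```

`dice_range("2d4+1")` returns `(3, 9)`, so a roll of 5 is within range.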

Dungeons & Dragons MCP Implementation

This example demonstrates how MCP servers can extend GPT-5’s capabilities into specialized domains. The Dungeons and Dragons server provides dice rolling functionality, but the same architectural pattern supports connections to business systems, databases, payment processors, and countless other external services.

Custom Tools for Enhanced Functionality

Okay, now we are going to look at the ‘Custom’ option, as promised. GPT-5’s custom tool system enables sophisticated integrations that were previously impossible or extremely complex to implement. These tools fall into several categories, each serving different development needs.

Code Generation Tool

The code generation tool demonstrates the simplest form of custom tool implementation:

This may seem like an easy Hello World example, but it’s significant that you can use it to run specific code. Imagine what this can mean if you feed it structured data in a custom pipeline.
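The model does not run anything itself; it returns the generated code as a tool call for your application to handle. A sketch of collecting those calls and packaging a result to send back, assuming the Responses API output item type `custom_tool_call` (with `call_id`, `name`, and `input` fields) and the input item type `custom_tool_call_output`:

```python
def custom_tool_calls(response) -> list[tuple[str, str, str]]:
    """Collect (call_id, tool_name, raw_input) for every custom tool call in a response."""
    calls = []
    for item in response.output:
        if getattr(item, "type", None) == "custom_tool_call":
            calls.append((item.call_id, item.name, item.input))
    return calls


def tool_result(call_id: str, output: str) -> dict:
    """Input item that returns a tool's result to the model on the next request."""
    return {"type": "custom_tool_call_output", "call_id": call_id, "output": output}
```

Pass `tool_result(...)` in the `input` list of a follow-up `responses.create` call together with `previous_response_id`. Model-generated code should run in a sandbox, never directly through `exec` in your application process.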

Lark Grammar Example

This code defines a math_exp tool that uses a Context-Free Grammar (CFG) written in Lark syntax to constrain outputs. The Lark grammar requires valid responses to be simple arithmetic expressions built from integers, addition (+), multiplication (*), and spaces, so the model emits only syntactically valid math expressions. The request asks GPT-5 to “Use the math_exp tool to add four plus four.”

This code example should output ‘4 + 4’ without condensing to 8.
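A sketch of the tool definition, assuming the custom tool `grammar` format with `syntax: "lark"`. The grammar is our reconstruction of the rules described above, not necessarily the original. Because it has no parentheses, the language it accepts is also regular, so a local regex can confirm what a valid math_exp payload looks like.

```python
import re

MATH_GRAMMAR = r"""
start: expr
expr: term (SP ADD SP term)*
term: factor (SP MUL SP factor)*
factor: INT
SP: " "
ADD: "+"
MUL: "*"
%import common.INT
"""

MATH_EXP_TOOL = {
    "type": "custom",
    "name": "math_exp",
    "description": "Creates valid mathematical expressions",
    "format": {"type": "grammar", "syntax": "lark", "definition": MATH_GRAMMAR},
}

# Integers joined by " + " or " * ": the same strings MATH_GRAMMAR accepts
MATH_PATTERN = re.compile(r"[0-9]+(?: [+*] [0-9]+)*")


def is_valid_math_exp(text: str) -> bool:
    return MATH_PATTERN.fullmatch(text) is not None


def build_math_request() -> dict:
    """Keyword arguments for client.responses.create()."""
    return {
        "model": "gpt-5",
        "input": "Use the math_exp tool to add four plus four.",
        "tools": [MATH_EXP_TOOL],
    }
```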

Regular Expression Grammar

For simpler pattern matching requirements, regular expressions provide an accessible alternative to full CFG implementations:

This example defines a custom timestamp tool constrained by a regex grammar. It requires a valid output to follow the pattern “Month Day Year at Hour AM/PM”, for example “August 7th 2025 at 10AM”. The code then prompts the model with: “Use the timestamp tool to save a timestamp for August 7th 2025 at 10AM.”
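A sketch of that tool, assuming the custom tool `grammar` format with `syntax: "regex"`. The pattern is our own approximation of the “Month Day Year at Hour AM/PM” format. OpenAI’s regex grammars use Rust regex syntax; this pattern sticks to features that behave the same in Python’s `re`, so it can be tested locally.

```python
import re

TIMESTAMP_PATTERN = (
    r"^(January|February|March|April|May|June|July|August|September|October|November|December)"
    r" ([1-9]|[12][0-9]|3[01])(st|nd|rd|th)?"  # day, optional ordinal suffix
    r" ([0-9]{4})"                             # four-digit year
    r" at (1[0-2]|[1-9])(AM|PM)$"              # 12-hour clock
)

TIMESTAMP_TOOL = {
    "type": "custom",
    "name": "timestamp",
    "description": "Saves a timestamp in 'Month Day Year at Hour AM/PM' format.",
    "format": {"type": "grammar", "syntax": "regex", "definition": TIMESTAMP_PATTERN},
}


def is_valid_timestamp(text: str) -> bool:
    return re.fullmatch(TIMESTAMP_PATTERN, text) is not None
```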

SQL Query Generation

For business applications, SQL generation proves invaluable for bridging the gap between natural language business questions and database queries.

Context-Free Grammar support proves particularly valuable when working with different SQL database systems, each with their own dialect-specific syntax requirements. Consider the difference between Microsoft SQL Server and PostgreSQL approaches to limiting query results:

Microsoft SQL Server:

PostgreSQL:

By supplying a separate grammar for each dialect, you can use GPT-5’s CFG support to generate dialect-specific SQL, eliminating the need for developers to manually adapt queries across different database systems.
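A sketch of per-dialect constraints. A full Lark grammar is the more general tool for SQL; to keep this example small and testable with Python’s `re`, it swaps in the regex grammar syntax and accepts only one top-N query shape per dialect. The tool names, patterns, and `orders` queries are hypothetical.

```python
import re

IDENT = r"[A-Za-z_][A-Za-z0-9_]*"
COLUMNS = rf"{IDENT}(, {IDENT})*"

# One deliberately narrow top-N query shape per dialect
SQL_PATTERNS = {
    # SQL Server limits rows with TOP right after SELECT
    "mssql": rf"^SELECT TOP [0-9]+ {COLUMNS} FROM {IDENT} ORDER BY {IDENT} (ASC|DESC);$",
    # PostgreSQL limits rows with LIMIT at the end
    "postgres": rf"^SELECT {COLUMNS} FROM {IDENT} ORDER BY {IDENT} (ASC|DESC) LIMIT [0-9]+;$",
}


def sql_tool(dialect: str) -> dict:
    """A custom tool whose output must match the chosen dialect's pattern."""
    return {
        "type": "custom",
        "name": f"{dialect}_query",
        "description": f"Runs a read-only {dialect} SELECT query.",
        "format": {"type": "grammar", "syntax": "regex", "definition": SQL_PATTERNS[dialect]},
    }


def matches_dialect(query: str, dialect: str) -> bool:
    return re.fullmatch(SQL_PATTERNS[dialect], query) is not None
```

In an application you would pass only the tool for the target database, e.g. `tools=[sql_tool("postgres")]`, so the model cannot emit the other dialect’s syntax.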

When we ran that on our example database we received:

Your results may vary depending on your data structure, and you should adapt the call accordingly.

This capability transforms how non-technical stakeholders can interact with data systems, enabling marketing teams, business analysts, and executives to query databases using natural language descriptions of their requirements.

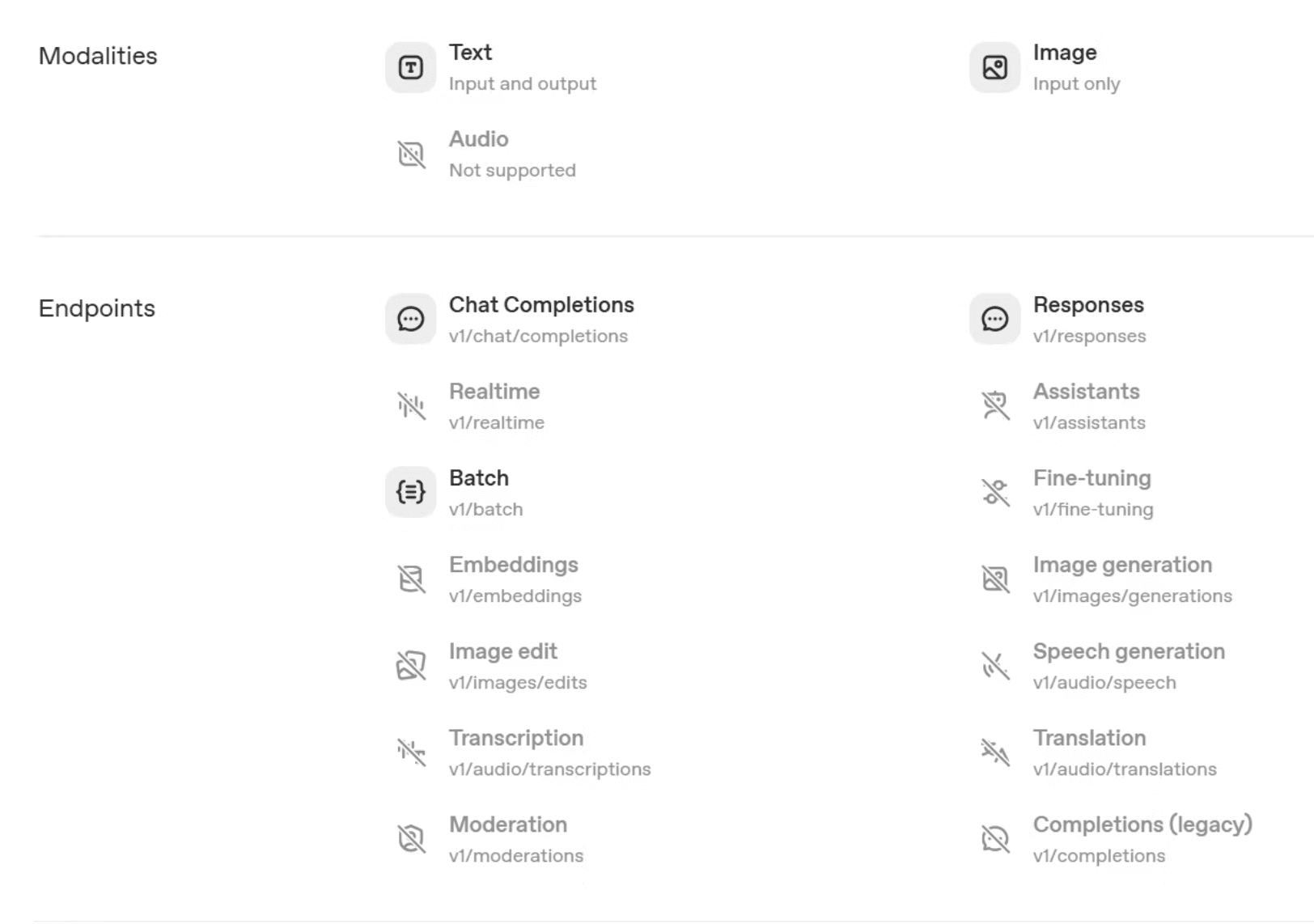

What’s Missing

We’ve covered a lot of new features, but GPT-5 is hot off the presses, so as of this writing, not everything from GPT-3.5 and GPT-4 is available. Notably, audio support was left out, and you can’t use GPT-5 to create embeddings, assistants, or speech. You will have to use different models for those for now.

Additionally, computer use is not available yet if you were planning to automate tasks on your local machine’s OS.

Conclusion

Congratulations on completing your grand tour of GPT-5. You’ve learned to navigate GPT-5’s architectural shift from chat completions to the responses API, use key features like the verbosity and reasoning effort controls, integrate external tools through MCP servers, and create custom tools with grammar constraints for structured outputs. These capabilities let you build sophisticated AI applications with stateful conversations, real-time data access, and precise output formatting that were much harder to achieve with earlier models. The moon is your ceiling now.

Additional Resources

• https://platform.openai.com/docs/models/gpt-5

• https://cookbook.openai.com/examples/gpt-5/gpt-5_new_params_and_tools

• https://github.com/openai/openai-python

• https://github.com/godfreynolan/gpt-5